Google Play Experiments Tool – Now in Beta!

2022 has been a year of change for ASO testing tools. Following the iOS 15 release, we were introduced to Product Page Optimization, which finally lets us test the visual assets of a store listing live on the App Store. In April 2022, Google Play’s Store Listing Experiments surprised us with some exciting new features that further improve testing reliability and the overall experience!

The Google Play Console has made three big changes to Store Listing Experiments. These new capabilities give developers more control over the confidence level and duration of each test, as well as statistically more reliable results in future tests.

We’ll take you through the newly updated Store Listing Experiment process step by step. It should be noted that these new features are not yet available to all developers.

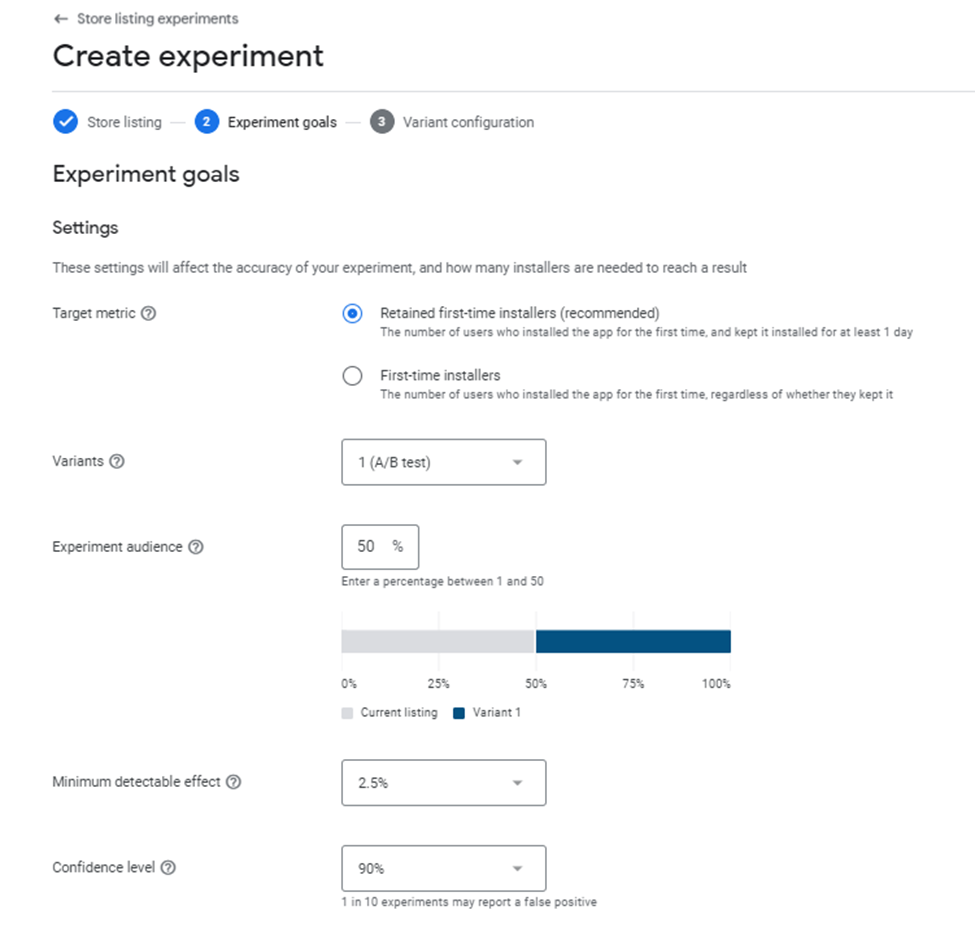

When creating an experiment, we start with the same steps as before: naming the test, choosing the store listing and the experiment type, and choosing whether the test will be for ‘default graphics’ or ‘localized’. We are then led to ‘Experiment Goals’.

In Part 2 of creating an experiment, titled ‘Experiment Goals’, we are asked to define what counts as a ‘success’. Here we choose which ‘Target Metric’ Google Play should use when determining the experiment’s result. In the legacy tool, we can toggle between the metrics only after the experiment is live; in the new tool, we tell Google Play up front which metric to use to determine a ‘winner’.

We’re able to choose between Retained first-time installers (which is recommended by Google) and First-time installers. Retained first-time installers are defined as the number of users who installed the app for the first time, and kept it installed for at least one day. First-time installers are defined as the number of users who installed the app for the first time, regardless of whether they kept it.
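
To make the difference between the two metrics concrete, here is a minimal sketch that counts both from a list of install records. The `Install` record and its fields are hypothetical and exist only for illustration; they are not part of any Google Play API or export format.

```python
from dataclasses import dataclass


@dataclass
class Install:
    """Hypothetical install record, used only for this illustration."""
    user_id: str
    is_first_install: bool  # the user had never installed the app before
    days_kept: int          # full days the app stayed installed


def first_time_installers(installs):
    # Every first-time install counts, whether or not the app was kept.
    return sum(1 for i in installs if i.is_first_install)


def retained_first_time_installers(installs, min_days=1):
    # Only first-time installs that were kept for at least `min_days` days.
    return sum(
        1 for i in installs
        if i.is_first_install and i.days_kept >= min_days
    )


sample = [
    Install("a", True, 0),    # first install, removed the same day
    Install("b", True, 3),    # first install, kept
    Install("c", False, 10),  # returning user, excluded from both metrics
    Install("d", True, 1),    # first install, kept for one day
]

print(first_time_installers(sample))           # 3
print(retained_first_time_installers(sample))  # 2
```

The retained count can never be larger than the first-time count, which is why the retained metric always works with the smaller sample.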

Note that neither of these target metrics includes returning users. Also keep in mind that retained first-time installers are a subset of first-time installers, so choosing ‘Retained first-time installers’ results in a smaller sample size. We recommend that apps with greater brand awareness and a large number of existing users choose ‘Retained first-time installers’ as their target metric. Smaller apps, which have fewer retained users and probably less brand recognition, are better off choosing ‘First-time installers’.

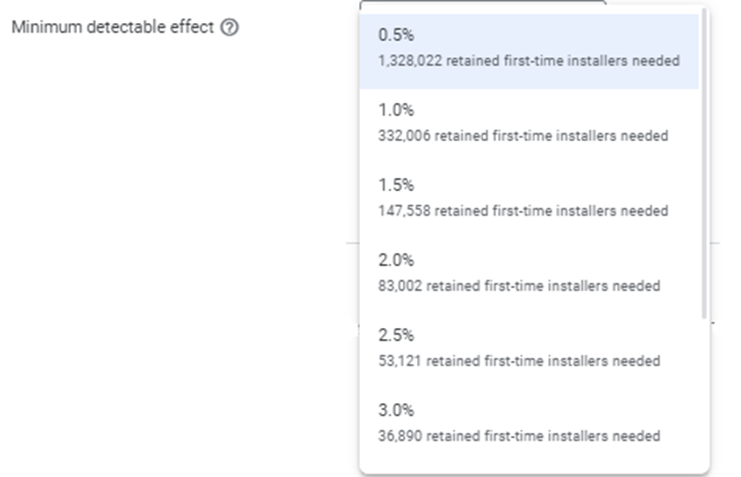

After choosing a Target Metric, we are asked to choose how many variants to test. The fewer variants tested, the less time is needed to complete the experiment. We then choose an Experiment audience, which determines the share of store listing visitors that will see a variant instead of the current listing. Next, we choose a ‘Minimum detectable effect’: the minimum percentage difference between a variant and the control required to declare a winner. If the difference is smaller than the chosen percentage, the result is considered a draw. The available values range from 0.5% to 3%. Google Play also gives a breakdown of how many users are needed to reach this result.

We are then asked to choose a confidence level, ranging from 90% to 99%, with a personalized breakdown of the installers needed to reach this level of confidence. As the confidence level increases, so does the total number of installers needed to reach it. Increasing the confidence level decreases the likelihood of a false positive.
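
To see why a smaller minimum detectable effect or a higher confidence level demands more users, here is a rough sketch based on the textbook sample-size approximation for comparing two conversion rates. The formula Google Play uses for its own breakdown is not covered in this post, so treat this purely as an illustration. The function name, the 30% baseline conversion rate, the 80% statistical power, and the reading of the minimum detectable effect as a relative lift are all our assumptions.

```python
from math import ceil
from statistics import NormalDist


def users_per_variant(baseline_rate, relative_mde, confidence=0.90, power=0.80):
    """Textbook two-proportion estimate; NOT Google Play's own calculation.

    baseline_rate: assumed conversion rate of the current listing (0..1)
    relative_mde:  minimum detectable effect, assumed to be a relative lift
    confidence:    two-sided confidence level, e.g. 0.90 to 0.99
    power:         assumed chance of detecting a real effect of that size
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Compare the extremes of the ranges offered in the experiment setup.
for mde in (0.005, 0.03):
    for confidence in (0.90, 0.99):
        needed = users_per_variant(0.30, mde, confidence)
        print(f"MDE {mde:.1%}, confidence {confidence:.0%}: {needed} users per variant")
```

Under these assumptions the required sample grows with the inverse square of the effect size, so moving from a 3% to a 0.5% minimum detectable effect is far more expensive than moving from 90% to 99% confidence.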

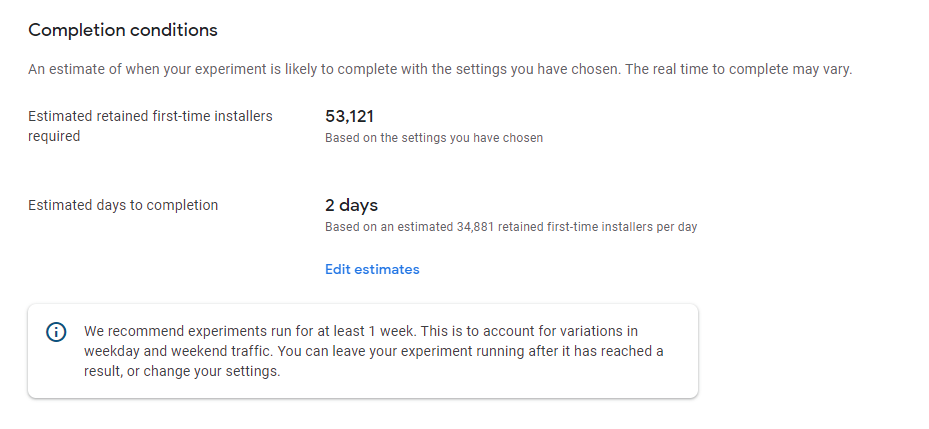

After filling in these goals, we are also given the option to edit the Completion conditions, including the estimated number of retained or first-time installers and the estimated number of days to completion. These conditions determine the duration of the test. Lastly, we choose the variant configuration before submitting the test.
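
As a back-of-the-envelope illustration of how these settings interact, the sketch below estimates the number of days needed to collect a required sample. It assumes that the experiment audience is split evenly between the variants and that the control keeps the remaining traffic; this is our simplification, not a documented description of how Google Play produces its estimate. The function and all of its inputs are hypothetical.

```python
from math import ceil


def estimated_days(users_needed_per_arm, daily_users, audience_share, num_variants):
    """Rough duration estimate under an assumed even traffic split.

    users_needed_per_arm: sample required for the control and for each variant
    daily_users:          users reaching the store listing per day
    audience_share:       fraction of users who see a variant (0..1, exclusive)
    num_variants:         number of variants tested against the control
    """
    if not 0 < audience_share < 1 or num_variants < 1:
        raise ValueError("audience_share must be in (0, 1) and num_variants >= 1")
    per_variant_daily = daily_users * audience_share / num_variants
    control_daily = daily_users * (1 - audience_share)
    # The test can only finish once the slowest arm has enough users.
    slowest_daily = min(per_variant_daily, control_daily)
    return ceil(users_needed_per_arm / slowest_daily)


print(estimated_days(10_000, 2_000, 0.5, 1))  # 10
print(estimated_days(10_000, 2_000, 0.5, 2))  # 20
```

With the same traffic and audience share, adding a second variant halves the daily users each variant receives and doubles the estimated duration.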

With Experiment Goals added to Google Play Store Listing Experiments, we can now estimate the required sample size and time to completion ahead of time, much more accurately than before, and conclude tests with a higher level of confidence.

Looking to become an expert on experiments? Read our blog post about A/B Testing with Google Play.